I had the impression that the environment has changed slightly, so I am writing down some notes.


When to use each Google Colab Pro accelerator

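- T4 has the lowest performance → to keep unit consumption down, start on T4 and check that things work as you go

- A100 offers a good balance of memory and performance → probably the better choice for production runs

- L4 offers high performance → also an option for performance-focused workloads

- TPU v2-8 is a processor originally designed for TensorFlow workloads → for when you want to run heavy matrix and tensor operations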
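To make the trade-off between these accelerators more concrete, here is a rough sketch that converts the hourly unit consumption recorded in this note into running time. The budget of 100 compute units and the helper name `hours_available` are assumptions for illustration only, and the rates are simply the values observed when this note was written, so they may differ today.

```python
# Hourly unit consumption as recorded in this note (observed values, may change).
UNITS_PER_HOUR = {"A100": 7.62, "L4": 2.09, "T4": 1.67, "TPU v2-8": 1.76}


def hours_available(budget_units: float, units_per_hour: float) -> float:
    """Return how many hours a unit budget lasts at a given consumption rate."""
    if units_per_hour <= 0:
        raise ValueError("units_per_hour must be positive")
    return budget_units / units_per_hour


# Assumption: a budget of 100 compute units, chosen only for illustration.
BUDGET = 100.0

# Print the accelerators from cheapest to most expensive per hour.
for name, rate in sorted(UNITS_PER_HOUR.items(), key=lambda kv: kv[1]):
    hours = hours_available(BUDGET, rate)
    print(f"{name:9s} {rate:5.2f} units/h -> about {hours:5.1f} h")
```

Comparing the resulting hours makes it easier to see why checking things on T4 first and switching to A100 only for the real run keeps consumption down.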

Base environment

Checked on a runtime with no GPU attached
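As an alternative to running each shell command separately, the versions checked in this section could also be collected in a single Python cell. This is a minimal sketch using only the standard library; the function names `package_version` and `environment_summary` are hypothetical, and it reports "not installed" rather than failing when a package is missing.

```python
import sys
from importlib import metadata


def package_version(name: str) -> str:
    """Return the installed version of a package, or a marker if it is absent."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return "not installed"


def environment_summary(packages=("torch", "tensorflow")) -> dict:
    """Collect the Python version and the versions of the given packages."""
    summary = {"python": sys.version.split()[0]}
    for name in packages:
        summary[name] = package_version(name)
    return summary


for key, value in environment_summary().items():
    print(f"{key}: {value}")
```

Saving this summary alongside a notebook makes it easier to notice later when the Colab environment has changed.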

Python version: 3.11.11

!python --version

Python 3.11.11

CUDA: 12.5

!nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2024 NVIDIA Corporation

Built on Thu_Jun__6_02:18:23_PDT_2024

Cuda compilation tools, release 12.5, V12.5.82

Build cuda_12.5.r12.5/compiler.34385749_0

PyTorch: 2.6.0+cu124

!pip show torch

!pip show tensorflow

Name: torch

Version: 2.6.0+cu124

Summary: Tensors and Dynamic neural networks in Python with strong GPU acceleration

Home-page: https://pytorch.org/

Author: PyTorch Team

Author-email: packages@pytorch.org

License: BSD-3-Clause

Name: tensorflow

Version: 2.18.0

Summary: TensorFlow is an open source machine learning framework for everyone.

Home-page: https://www.tensorflow.org/

Author: Google Inc.

Author-email: packages@tensorflow.org

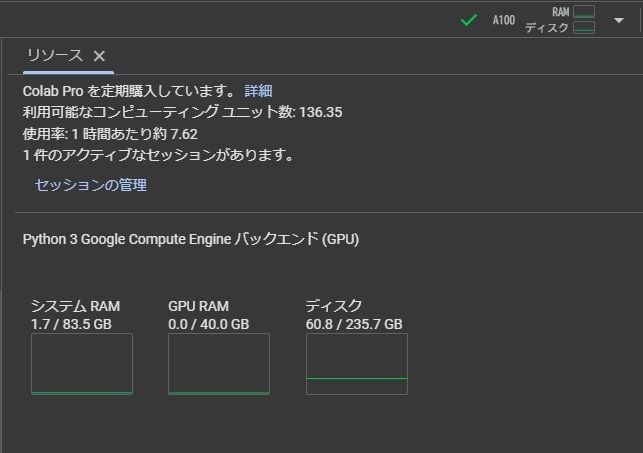

License: Apache 2.0A100選択時

Consumes about 7.62 compute units per hour

!nvidia-smi

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.54.15 Driver Version: 550.54.15 CUDA Version: 12.4 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA A100-SXM4-40GB Off | 00000000:00:04.0 Off | 0 |

| N/A 33C P0 45W / 400W | 0MiB / 40960MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

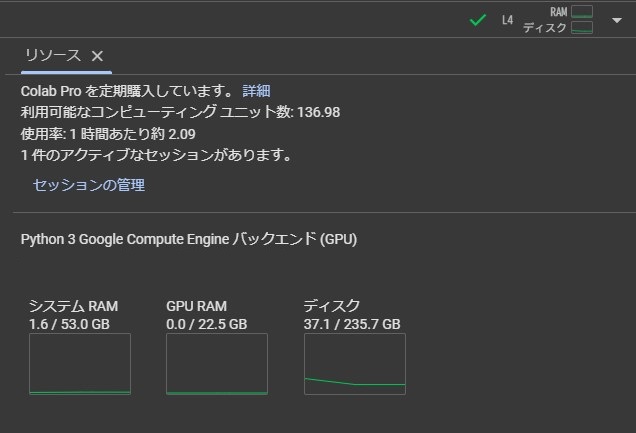

+-----------------------------------------------------------------------------------------+L4選択時

Consumes about 2.09 compute units per hour
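This note records the full `nvidia-smi` report for each GPU. When only the GPU name and total memory are needed, the query mode of `nvidia-smi` can be called from Python instead. The sketch below assumes the CSV output has the form `<name>, <memory in MiB>`; the helper names `parse_gpu_line` and `query_gpus` are hypothetical, and the function returns an empty list on a runtime without an NVIDIA GPU.

```python
import shutil
import subprocess


def parse_gpu_line(line: str) -> dict:
    """Parse one CSV line of the assumed form '<name>, <memory in MiB>'."""
    name, memory_mib = (part.strip() for part in line.rsplit(",", 1))
    return {"name": name, "memory_total_mib": int(memory_mib)}


def query_gpus() -> list:
    """Return one dict per visible GPU, or an empty list if none can be queried."""
    if shutil.which("nvidia-smi") is None:
        return []  # nvidia-smi is not on PATH (for example, a CPU-only runtime)
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return []
    gpus = []
    for line in result.stdout.splitlines():
        try:
            gpus.append(parse_gpu_line(line))
        except ValueError:
            continue  # skip blank lines or values that are not plain integers
    return gpus


print(query_gpus() or "No NVIDIA GPU visible in this runtime")
```

The full report itself is still what the plain command below prints.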

!nvidia-smi

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.54.15 Driver Version: 550.54.15 CUDA Version: 12.4 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA L4 Off | 00000000:00:03.0 Off | 0 |

| N/A 51C P8 12W / 72W | 0MiB / 23034MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

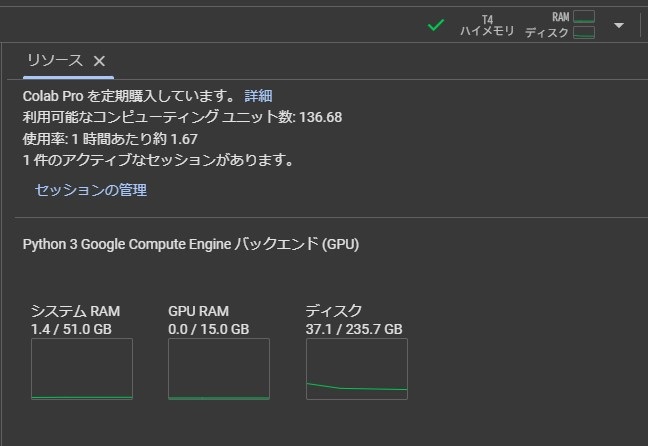

+-----------------------------------------------------------------------------------------+T4 選択時

Consumes about 1.67 compute units per hour

!nvidia-smi

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.54.15 Driver Version: 550.54.15 CUDA Version: 12.4 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 Tesla T4 Off | 00000000:00:04.0 Off | 0 |

| N/A 49C P8 9W / 70W | 0MiB / 15360MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

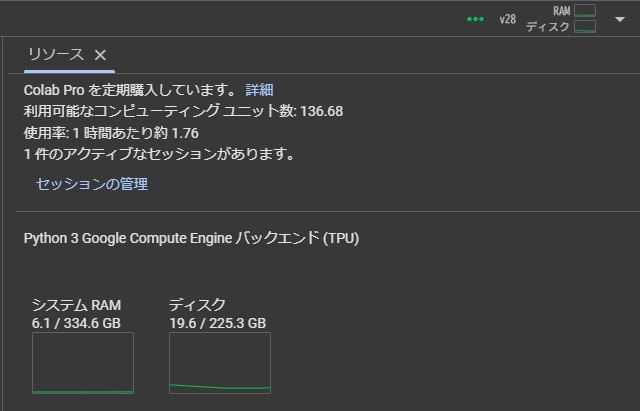

+-----------------------------------------------------------------------------------------+v2-8 TPU 選択時

Consumes about 1.76 compute units per hour

import torch_xla.core.xla_model as xm

# Get memory information for the TPU device
mem_info = xm.get_memory_info('xla:0')

# Display the memory information
print(f"TPU memory info: {mem_info}")

#----

TPU memory info: {'bytes_used': 32768, 'bytes_limit': 8034189312, 'peak_bytes_used': 32768}

# The memory limit (bytes_limit) is 8034189312 bytes ≈ 7.48 GiB, which is the limit per TPU core. Multiply by the 8 cores for the total, roughly 59.9 GiB.
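The arithmetic behind that last comment can be checked with a few lines of plain Python. This is only a sketch: it assumes all 8 cores report the same `bytes_limit` as the single `xla:0` device queried above, which this note did not verify core by core.

```python
# bytes_limit as reported for 'xla:0' in this note.
BYTES_LIMIT_PER_CORE = 8034189312
# A v2-8 TPU exposes 8 cores; assumed here to share the same per-core limit.
CORES = 8


def bytes_to_gib(n_bytes: int) -> float:
    """Convert a byte count to GiB (1 GiB = 1024**3 bytes)."""
    return n_bytes / 1024**3


per_core_gib = bytes_to_gib(BYTES_LIMIT_PER_CORE)
total_gib = per_core_gib * CORES

print(f"per core: {per_core_gib:.2f} GiB")
print(f"total across {CORES} cores: {total_gib:.2f} GiB")
```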